Custom Robots.txt file for Blogger/Blogspot blogs for SEO

Blogger now lets you edit your blog's robots.txt file. What is a robots.txt file? It is a plain text file that tells search engines which parts of a site they should not crawl, which in turn affects what can appear in search results. Search engine bots run automatically, and before they access your site they check the robots.txt file to see whether they are allowed to crawl a given page.
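To make that check concrete, here is a minimal sketch in Python using the standard library's `urllib.robotparser`. The rules, the bot name `ExampleBot`, and the helper `may_crawl` are made up for illustration. They are not the rules recommended for Blogger, and real crawlers may interpret edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only: block everything under /private.
EXAMPLE_RULES = """\
User-agent: *
Disallow: /private
"""


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # "ExampleBot" is an assumed crawler name, not a real search engine bot.
    for path in ("/private/notes.html", "/2020/01/hello.html"):
        print(path, may_crawl(EXAMPLE_RULES, "ExampleBot", path))
```

A well-behaved bot performs roughly this lookup for each URL and skips the ones the file disallows.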

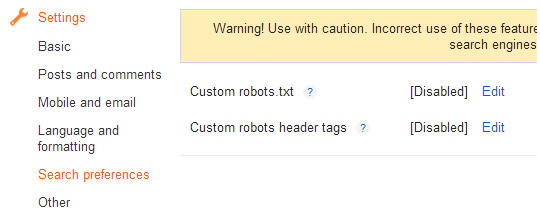

How to Enable Custom Robots.txt in Blogger

1. Blogger Dashboard >> Select Blog >> Select Settings tab >> Search Preferences

2. Click Edit next to Custom robots.txt.

3. Click Yes and proceed to the next step.

4. A text box appears. Paste the following code into it, replacing YOURBLOGURL with your blog's address.

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: http://YOURBLOGURL.blogspot.com/feeds/posts/default?orderby=UPDATED

5. Finally, click the Save Changes button to save the code.
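If you want to check what these rules permit before saving them, the sketch below fills in a blog name and tests a few paths with Python's standard `urllib.robotparser`. The helpers `build_robots_txt` and `is_allowed`, the blog name `myblog`, and the sample paths are assumptions for illustration. Python's parser applies the first matching rule, which may differ from how a particular search engine resolves conflicts, though for these rules the outcome should be the same.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(blog_name: str) -> str:
    """Return the rules from this post with the blog name filled in."""
    return (
        "User-agent: Mediapartners-Google\n"
        "Disallow:\n"
        "\n"
        "User-agent: *\n"
        "Disallow: /search\n"
        "Allow: /\n"
        "\n"
        f"Sitemap: http://{blog_name}.blogspot.com/feeds/posts/default?orderby=UPDATED\n"
    )


def is_allowed(robots_txt: str, user_agent: str, path: str) -> bool:
    """Check a path against the rules without contacting any server."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    rules = build_robots_txt("myblog")  # "myblog" is a placeholder name
    # Search and label pages live under /search and are blocked for general bots.
    print(is_allowed(rules, "Googlebot", "/search/label/SEO"))
    # Ordinary post URLs stay crawlable.
    print(is_allowed(rules, "Googlebot", "/2020/01/my-post.html"))
    # The AdSense crawler (Mediapartners-Google) has an empty Disallow, so nothing is blocked.
    print(is_allowed(rules, "Mediapartners-Google", "/search/label/SEO"))
```

In short, the first group lets the AdSense crawler read every page, while the second keeps other bots out of search and label result pages and leaves posts open.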

I hope this tip helps you. If you run into any crawling issues, feel free to leave your questions in the comments below and I will try to help you solve them.